Perplexity AI: Retrieval-Based Intelligence and System

Perplexity is presented as a retrieval-augmented generation system built on Perplexity Sonar that combines live information gathering with model-driven reasoning to produce cited, source-linked answers. The architecture emphasizes evidence-based responses and structured research support: external content is fetched and ranked through a retrieval pipeline, then interpreted by a reasoning model to generate concise summaries and annotated outputs. The system’s workflow focuses on traceability of claims, explicit source references, and modular steps that separate retrieval, aggregation, and citation assembly to support reproducible research-oriented interactions.

What Is Perplexity? Core Design and Retrieval Workflow

The platform is centered on multi-stage retrieval pipelines and index-based search that feed a reasoning layer. A user query initiates web or index lookups, returning candidate documents and passages. Those candidates pass through aggregation and ranking stages that select evidence by relevance, recency, and signal strength. The selected material is then provided to a generative model that synthesizes findings into a coherent answer while assembling citations that point directly to source excerpts or URLs. Major components that shape system behavior include retrieval stages, aggregation layers, reasoning passes, and a citation assembly module that maps generated claims to explicit sources.
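The ranking step described above can be illustrated with a minimal Python sketch. It is not Perplexity's actual scoring method: the `Candidate` type, the term-overlap relevance measure, the exponential recency decay, and the 0.7/0.3 weights are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Candidate:
    # Hypothetical record for one retrieved document.
    url: str
    text: str
    published: date


def relevance(query: str, text: str) -> float:
    """Toy relevance: fraction of query terms that appear in the text."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def recency(published: date, today: date, half_life_days: float = 365.0) -> float:
    """Exponential decay: 1.0 for a document published today, 0.5 after one half-life."""
    age_days = max((today - published).days, 0)
    return 0.5 ** (age_days / half_life_days)


def rank(query, candidates, today, w_rel=0.7, w_rec=0.3):
    """Return (score, candidate) pairs, best first. The weights are assumed values."""
    scored = [
        (w_rel * relevance(query, c.text) + w_rec * recency(c.published, today), c)
        for c in candidates
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored
```

Under these assumptions, a recent on-topic page outranks an equally on-topic page that is a decade old, and both outrank a fresh page that shares no terms with the query.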

How Perplexity Processes Queries

Query processing follows a structured flow: (1) the input is normalized and expanded into retrieval requests; (2) search systems query live web indexes or internal databases to collect candidate documents; (3) ranking algorithms score candidates by relevance, freshness, and contextual fit; (4) an aggregation layer extracts salient passages and builds a condensed evidence set; (5) a reasoning model interprets the evidence and composes an answer; and (6) a citation assembly component links statements to specific sources or snippets. Each stage emits metadata—such as provenance, relevance scores, and timestamps—that supports traceability and allows downstream workflows to inspect contributing material.
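The six stages above can be sketched end to end as follows. This is a toy illustration under stated assumptions, not the production system: `CORPUS` is a hypothetical in-memory stand-in for a web index, the "reasoning model" is replaced by simple concatenation of passages, and the `Trace` class is an invented helper that records the per-stage metadata the text mentions.

```python
import re
from dataclasses import dataclass, field

# Hypothetical corpus standing in for live web indexes or internal databases.
CORPUS = {
    "https://example.org/a": "Retrieval augmented generation grounds answers in retrieved text.",
    "https://example.org/b": "Ranking orders retrieved passages by relevance.",
    "https://example.org/c": "Bread is baked from flour and water.",
}


def tokens(text):
    return set(re.findall(r"\w+", text.lower()))


@dataclass
class Trace:
    """Collects metadata emitted by each stage so the run can be inspected later."""
    steps: list = field(default_factory=list)

    def log(self, stage, **meta):
        self.steps.append({"stage": stage, **meta})


def answer(query, k=2):
    trace = Trace()
    # (1) Normalize the input.
    q = " ".join(query.lower().split())
    trace.log("normalize", query=q)
    # (2) Collect candidate documents that share at least one term with the query.
    q_terms = tokens(q)
    hits = [(src, text) for src, text in CORPUS.items() if q_terms & tokens(text)]
    trace.log("retrieve", candidates=[src for src, _ in hits])
    # (3) Score candidates by the fraction of query terms they contain.
    scored = sorted(
        ((len(q_terms & tokens(text)) / len(q_terms), src, text) for src, text in hits),
        reverse=True,
    )
    trace.log("rank", scores={src: round(score, 2) for score, src, _ in scored})
    # (4) Keep the top-k passages as the evidence set.
    evidence = scored[:k]
    trace.log("aggregate", kept=[src for _, src, _ in evidence])
    # (5) Compose an answer; a real system would call a generative model here.
    body = " ".join(f"{text} [{i}]" for i, (_, _, text) in enumerate(evidence, 1))
    trace.log("compose", length=len(body))
    # (6) Link each citation marker to its source.
    citations = {i: src for i, (_, src, _) in enumerate(evidence, 1)}
    trace.log("cite", citations=citations)
    return body, citations, trace
```

Calling `answer("retrieved passages relevance")` returns the composed text, a marker-to-URL mapping, and a trace whose `steps` list holds one metadata record per stage.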

Research-Oriented Features and Output Format

Outputs are designed for research use and typically include linked sources, structured summaries, and short explanatory breakdowns. Answers often present a concise lead, followed by a numbered or bulleted list of supporting points tied to explicit citations. For deeper queries, the system can produce follow-up interpretations, methodical comparisons of sourced claims, and extractive snippets that show the original context for each citation. Metadata such as publication date, author, and URL are surfaced alongside claims to assist assessment of evidence quality and temporal relevance.
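One way to picture this output format is a small renderer that takes a lead sentence, supporting points, and source metadata, and produces a cited text answer. The `Source` and `Point` types and the exact layout are assumptions made for this sketch; the real output schema is not specified in this article.

```python
from dataclasses import dataclass


@dataclass
class Source:
    # Hypothetical metadata fields, mirroring those named in the text.
    url: str
    author: str
    published: str  # ISO date string, e.g. "2024-01-15"


@dataclass
class Point:
    text: str
    source_ids: list  # 1-based indexes into the sources list


def render(lead, points, sources):
    """Render a concise lead, bulleted supporting points, and a source list."""
    lines = [lead, ""]
    for point in points:
        for i in point.source_ids:
            if not 1 <= i <= len(sources):
                raise ValueError(f"citation [{i}] has no matching source")
        marks = "".join(f"[{i}]" for i in point.source_ids)
        lines.append(f"- {point.text} {marks}")
    lines += ["", "Sources:"]
    for i, s in enumerate(sources, 1):
        lines.append(f"[{i}] {s.author}, {s.published}, {s.url}")
    return "\n".join(lines)
```

Rejecting a citation marker that has no matching source keeps every rendered claim traceable, which is the property the format is meant to guarantee.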

Key Capabilities and Technical Characteristics

The system’s capabilities derive from combining indexed retrieval with model reasoning. Core technical characteristics include retrieval-driven reasoning that grounds generated text in sourced material; rapid aggregation of multiple documents to form concise syntheses; and citation mapping that attributes claims to explicit snippets or links. The design supports data-heavy tasks by passing structured evidence sets into reasoning passes rather than relying solely on parametric model memory. This separation enables clearer provenance, easier verification of claims, and the ability to refresh answers with up-to-date web evidence. The platform handles exploratory topics, technical questions, literature surveys, and factual lookups by prioritizing relevant passages and assembling source-linked summaries.
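Citation mapping can be approximated, for illustration only, by matching each generated claim to the snippet that covers most of its terms. The coverage measure and the 0.5 threshold below are assumptions; a production system would likely use a stronger attribution method than word overlap.

```python
import re


def tokens(text):
    return set(re.findall(r"\w+", text.lower()))


def map_citations(claims, snippets, min_coverage=0.5):
    """Map each claim to the id of its best-supporting snippet, or None.

    claims: list of generated sentences.
    snippets: dict of snippet id -> snippet text.
    min_coverage: assumed minimum fraction of claim terms found in the snippet.
    """
    mapping = {}
    for claim in claims:
        claim_terms = tokens(claim)
        best_id, best_cov = None, 0.0
        for sid, text in snippets.items():
            cov = len(claim_terms & tokens(text)) / len(claim_terms) if claim_terms else 0.0
            if cov > best_cov:
                best_id, best_cov = sid, cov
        mapping[claim] = best_id if best_cov >= min_coverage else None
    return mapping
```

A claim that maps to `None` has no snippet meeting the threshold, which flags it as unsupported by the evidence set rather than silently attaching a weak citation.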

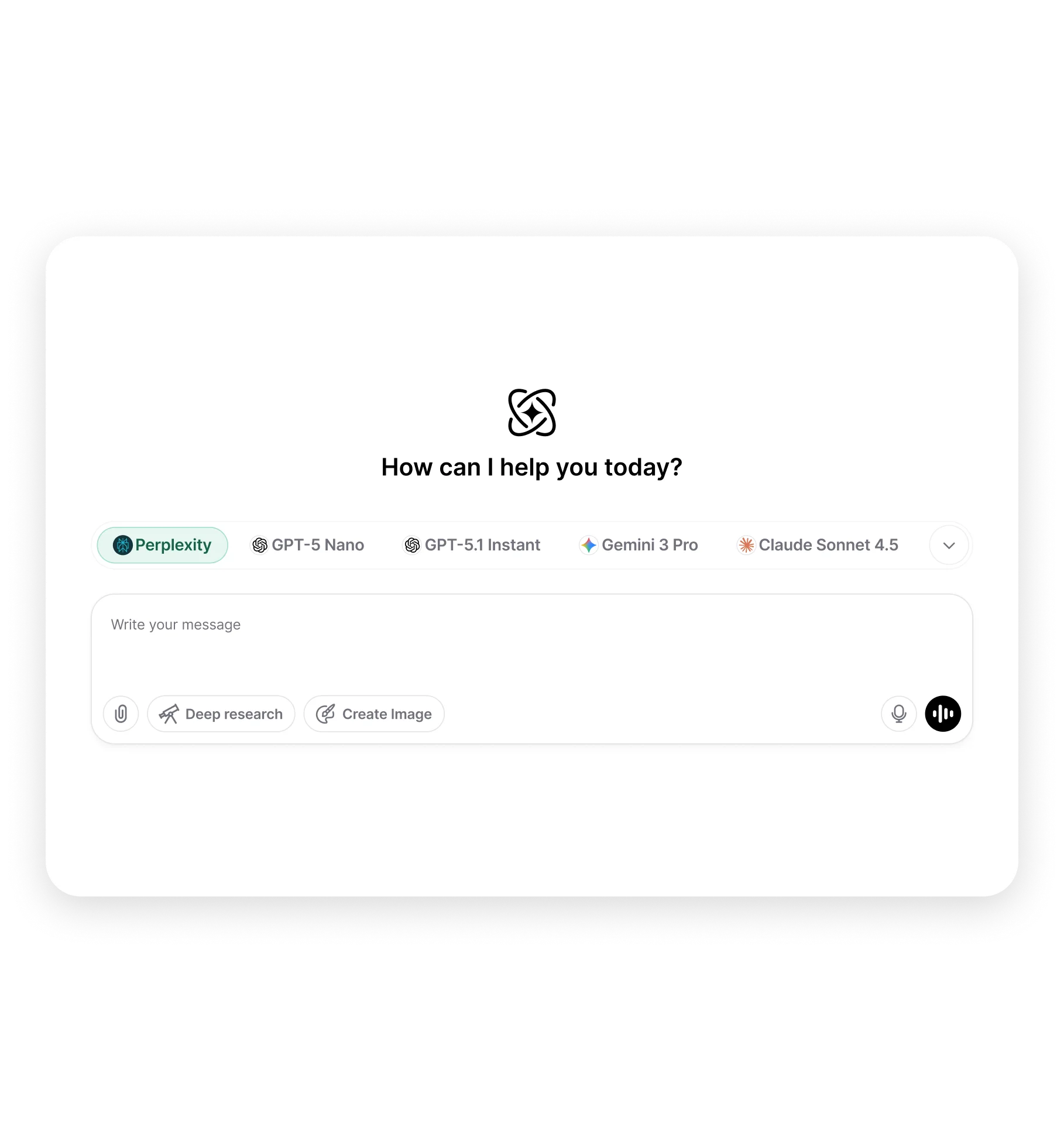

Using Perplexity-Inspired Workflows Inside Chat & Ask AI

Within Chat & Ask AI, retrieval-enhanced behaviors appear when tasks require factual lookup, evidence-supported summaries, or document interpretation. The workflow typically involves selecting an appropriate model, submitting a query or uploading documents, and receiving structured outputs that include summaries and source links. For web-based lookups, the system performs retrieval and ranking steps and returns a cited synthesis. For document-focused tasks, uploaded files are parsed and passages are selected to form an evidence set that the model uses to answer targeted questions. Outputs include citation metadata and extractive snippets to allow verification and further exploration.
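For the document-focused path, a rough sketch of parsing and passage selection might split the uploaded text into overlapping word windows and keep the windows that best match the question. The window size, overlap, and overlap-count scoring are illustrative assumptions, and the function names are hypothetical rather than part of Chat & Ask AI.

```python
import re


def chunk(text, size=40, overlap=10):
    """Split text into word windows of `size` words that overlap by `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [
        " ".join(words[i:i + size])
        for i in range(0, max(len(words) - overlap, 1), step)
    ]


def select_passages(question, passages, k=2):
    """Return up to k passages sharing the most terms with the question."""
    q_terms = set(re.findall(r"\w+", question.lower()))
    scored = [
        (len(q_terms & set(re.findall(r"\w+", p.lower()))), i, p)
        for i, p in enumerate(passages)
    ]
    # Highest overlap first; ties keep original document order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [p for score, _, p in scored[:k] if score > 0]
```

The overlap between windows is there so that a sentence straddling a boundary still appears whole in at least one passage.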

Frequently Asked Questions

What is Perplexity AI?

Perplexity is a retrieval-augmented generation system built on Perplexity Sonar that integrates live search with model reasoning to produce cited, source-linked answers.

How does Perplexity generate cited answers?

The system retrieves candidate documents, ranks and extracts salient passages, synthesizes those passages with a generative model, and assembles citations linking claims to specific sources or snippets.

How does retrieval-augmented generation work?

Retrieval-augmented generation supplies a model with externally retrieved evidence at query time; the model uses that evidence as input to produce grounded, source-referenced outputs rather than relying solely on stored model parameters.
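As a minimal sketch of that idea, the function below assembles a prompt that places numbered evidence ahead of the question, so a model can answer from the supplied passages and cite them by number. The prompt wording and the `build_prompt` name are assumptions for illustration; no model call is made here.

```python
def build_prompt(question, passages):
    """Build a grounded prompt from (source, text) pairs retrieved at query time."""
    evidence = "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(passages, 1)
    )
    return (
        "Answer the question using only the numbered evidence below.\n"
        "Cite evidence as [n] after each claim. "
        "If the evidence is insufficient, say so.\n\n"
        f"Evidence:\n{evidence}\n\n"
        f"Question: {question}\nAnswer:"
    )
```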

Does Perplexity rely on real-time information?

Yes. The retrieval pipeline can query live web indexes and online sources to incorporate recent information and timestamps into the evidence set.

What types of tasks are supported?

Tasks include factual lookups, literature surveys, technical summaries, data-centric queries, document Q&A, and exploratory research that benefits from explicit sourcing.

How does Perplexity process long or complex queries?

Long or complex queries are decomposed into focused retrieval requests; relevant passages are aggregated across sources and passed into reasoning passes that synthesize a multi-part, source-linked response.
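A deliberately naive sketch of decomposition is shown below: the query is split on question marks, semicolons, and the word "and", each part is sent to a retriever, and the results are merged without duplicate sources. The splitting rule is an assumption for illustration (a real system would more plausibly use a model to plan sub-queries), and `retrieve` is a hypothetical callable supplied by the caller.

```python
import re


def decompose(query):
    """Split a compound query into focused sub-queries (naive rule-based split)."""
    parts = re.split(r"\?|;|\band\b", query, flags=re.IGNORECASE)
    return [part.strip() for part in parts if part.strip()]


def gather(query, retrieve):
    """Run each sub-query through `retrieve` and merge results, one entry per source.

    retrieve: callable taking a sub-query and returning (source, passage) pairs.
    Returns (sub_query, source, passage) triples in first-seen order.
    """
    seen, merged = set(), []
    for sub in decompose(query):
        for source, passage in retrieve(sub):
            if source not in seen:
                seen.add(source)
                merged.append((sub, source, passage))
    return merged
```

Keeping the originating sub-query with each passage lets a later synthesis step organize the multi-part response by sub-question.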

Does Perplexity support multimodal inputs?

The architecture can be extended to handle document uploads and media-derived text (for example, transcripts). Support for non-text modalities depends on the specific ingestion and parsing components in use.

What affects availability or response speed?

Response speed depends on retrieval latency, query complexity, ranking and aggregation workload, and the chosen model’s processing time; network conditions and the freshness of indexes can also influence availability.
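To see which factor dominates in a given run, each stage can be timed separately. The helper below is a generic sketch, not a Perplexity tool: `timed_pipeline` and the stage names passed to it are hypothetical, and the stages themselves are whatever callables the caller provides.

```python
import time


def timed_pipeline(stages, payload):
    """Run stages in order, recording wall-clock seconds spent in each.

    stages: list of (name, callable) pairs; each callable takes the previous
    stage's output and returns the next stage's input.
    """
    timings = {}
    for name, fn in stages:
        start = time.perf_counter()
        payload = fn(payload)
        timings[name] = time.perf_counter() - start
    timings["total"] = sum(timings.values())
    return payload, timings
```

This measures a strictly sequential run; if retrieval requests are issued in parallel, the retrieval cost is closer to the slowest request than to the sum of all of them.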