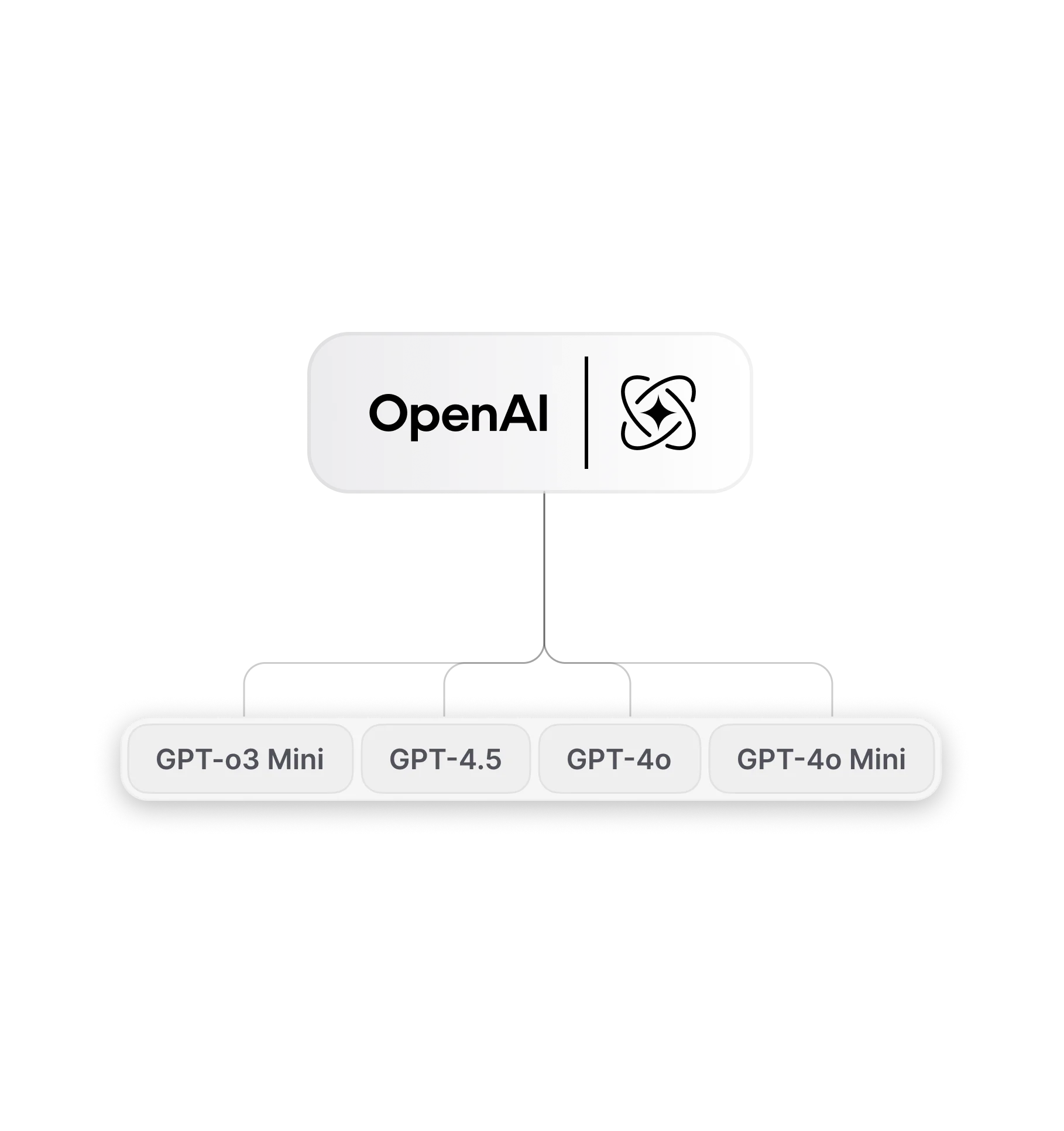

Chat & Ask AI: Developed on OpenAI Models

Chat & Ask AI is your access point to AI tools built on OpenAI's most advanced models. The platform integrates technologies built on GPT-5.2, GPT-5, GPT-4o, and o3-mini, giving you strong capabilities in text-based chat, problem-solving, and creative content generation. Let's explore how these AI technologies can support your daily tasks and work projects.

Advanced AI Models: Built on GPT-5.2 & GPT-5.1

The OpenAI family inside Chat & Ask AI consists of several large language models. Deployments include variants built on GPT-5.2, GPT-5.1, OpenAI o3, GPT-4o, GPT-4o mini, and GPT-4.1. Each variant targets specific technical needs: some focus on high-precision reasoning and long-context processing, others handle multimodal inputs such as images plus text, and certain versions prioritize low-latency, cost-effective interaction for high-volume queries. Model selection and runtime parameters are matched to each task profile, while input and output conventions stay consistent across variants.

Model Roles and Technical Positioning

Reasoning-heavy workflows route to models built for extended context and step-based analysis.

Creative and generative tasks use models developed to handle expressive text and multimodal prompts.

Coding and debugging requests are routed to models with focused code-understanding capabilities.

Fast conversational exchanges are handled by models optimized for low-latency responses.
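A minimal sketch of task-based routing, assuming a simple keyword heuristic. The `TaskType` profiles, the `classify` and `select_model` helpers, and the model assigned to each profile are all hypothetical; the platform's actual routing logic is not documented here.

```python
from enum import Enum
from typing import Optional


class TaskType(Enum):
    REASONING = "reasoning"
    CREATIVE = "creative"
    CODING = "coding"
    CHAT = "chat"


# Hypothetical routing table: the names are real OpenAI model IDs,
# but which one the platform assigns to each profile is an assumption.
ROUTES = {
    TaskType.REASONING: "o3",
    TaskType.CREATIVE: "gpt-4o",
    TaskType.CODING: "gpt-4.1",
    TaskType.CHAT: "gpt-4o-mini",
}

# Naive keyword heuristics standing in for a real task classifier.
KEYWORDS = [
    (TaskType.CODING, ("code", "bug", "function", "stack trace")),
    (TaskType.REASONING, ("prove", "analyze", "compare", "step by step")),
    (TaskType.CREATIVE, ("poem", "story", "slogan", "caption")),
]


def classify(prompt: str) -> TaskType:
    """Guess a task profile from the prompt text; default to fast chat."""
    text = prompt.lower()
    for task, words in KEYWORDS:
        if any(word in text for word in words):
            return task
    return TaskType.CHAT


def select_model(prompt: str, override: Optional[str] = None) -> str:
    """An explicit user choice wins; otherwise route by task profile."""
    if override is not None:
        return override
    return ROUTES[classify(prompt)]


print(select_model("Why does this function return None?"))
print(select_model("Write a poem about autumn", override="gpt-5.1"))
```

An explicit choice always takes precedence here, mirroring the option to pick a model before submitting a request.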

Capabilities and Input Handling

Models accept plain text prompts, uploaded documents, code snippets, and images. Long-context inputs use segmented context windows and summarization strategies to preserve continuity. Multimodal inputs combine visual and textual data into unified representations, enabling captions, analyses, or integrated outputs. API-style usage supports structured prompts, system instructions, and return formats suitable for downstream processing or human review.
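To illustrate structured prompts with a system instruction and a machine-readable return format, the sketch below assembles a request payload in the role/content message layout used by OpenAI's Chat Completions API. The `build_request` helper and the sample invoice text are hypothetical, and whether Chat & Ask AI exposes such an interface directly is an assumption; the code only builds the payload and sends nothing.

```python
import json


def build_request(model: str, system: str, user: str, want_json: bool = True) -> dict:
    """Assemble a chat-style request payload (hypothetical helper)."""
    payload = {
        "model": model,
        "messages": [
            # The system message sets standing instructions for the whole exchange.
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
    }
    if want_json:
        # Request a machine-readable reply suitable for downstream processing.
        payload["response_format"] = {"type": "json_object"}
    return payload


req = build_request(
    model="gpt-4o",
    system="You extract key fields from invoices. Reply in JSON.",
    user="Invoice 123, total $45.00, due 2024-07-01",
)
print(json.dumps(req, indent=2))
```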

Key Capabilities Across the Model Lineup

Core abilities common to the OpenAI family include structured reasoning for multi-step problems, code generation and interpretation, multilingual output, and multimodal comprehension. Performance depends on model architecture, training data scope, and task-specific fine-tuning. Implementations offer configurable temperature and length controls, with lower temperature settings producing more consistent, repeatable outputs, and support common developer and content workflows.
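Temperature rescales the model's raw scores (logits) before they become probabilities: p_i = exp(z_i / T) / sum_j exp(z_j / T). The sketch below is the standard temperature-scaled softmax, shown for intuition rather than as a description of the platform's internals; the example logits are made up.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature nearly all probability mass lands on the top-scoring option, which is why low settings make outputs more repeatable.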

Reasoning, Coding, and Complex Task Handling

Models break complex tasks into discrete steps, apply internal chains of reasoning, and can include intermediate explanations. For code queries, models produce example snippets, inline comments, and refactoring suggestions. Analytical requests may return tabular summaries, calculated results, or structured plans for review and iteration.
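Structured plans are easiest to review and iterate on when the model is asked for a fixed shape. Assuming a hypothetical JSON schema of the form `{"steps": [...]}`, a sketch of validating such a reply and rendering it as a table might look like this; `Step`, `parse_plan`, and `as_table` are illustrative names.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    number: int
    action: str


def parse_plan(raw: str) -> List[Step]:
    """Validate a JSON plan of the form {"steps": ["...", ...]} (hypothetical schema)."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    steps = data.get("steps")
    if not isinstance(steps, list) or not steps:
        raise ValueError("expected a non-empty 'steps' list")
    if not all(isinstance(s, str) and s.strip() for s in steps):
        raise ValueError("every step must be a non-empty string")
    return [Step(i, s.strip()) for i, s in enumerate(steps, start=1)]


def as_table(steps: List[Step]) -> str:
    """Render the plan as a simple text table for human review."""
    rows = ["| # | Step |", "|---|------|"]
    rows += [f"| {s.number} | {s.action} |" for s in steps]
    return "\n".join(rows)


reply = '{"steps": ["Load the sales CSV", " Compute monthly totals ", "Flag outliers"]}'
print(as_table(parse_plan(reply)))
```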

Multimodal and Long-Context Abilities

Multimodal capabilities let models interpret images alongside text, extract key details, and merge those signals into coherent responses. Long-context handling relies on hierarchical summarization and sliding-window context methods to keep relevance across extended documents or multi-turn conversations, supporting tasks such as document summarization and extended debugging sessions.
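A minimal sketch of the two long-context techniques named above, assuming whitespace-split words stand in for model tokens and a `summarize` callback stands in for a model call. The helper names and the window size, overlap, and `fan_in` values are illustrative, not the platform's settings.

```python
from typing import Callable, List


def sliding_windows(tokens: List[str], size: int, overlap: int) -> List[List[str]]:
    """Split tokens into windows of `size` that share `overlap` tokens with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    step = size - overlap
    windows = []
    for start in range(0, len(tokens), step):
        windows.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # the last window already reaches the end
    return windows


def hierarchical_summary(
    tokens: List[str],
    size: int,
    overlap: int,
    summarize: Callable[[List[str]], str],
    fan_in: int = 4,
) -> str:
    """Summarize each window, then summarize groups of summaries until one remains."""
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2")
    level = [summarize(w) for w in sliding_windows(tokens, size, overlap)]
    while len(level) > 1:
        level = [summarize(level[i:i + fan_in]) for i in range(0, len(level), fan_in)]
    return level[0] if level else ""


words = "one two three four five six seven eight nine ten".split()
print(sliding_windows(words, size=4, overlap=1))
# A trivial stand-in summarizer that keeps the first item of each group.
print(hierarchical_summary(words, 4, 1, summarize=lambda parts: parts[0]))
```

The overlap keeps sentences that straddle a boundary visible in both neighboring windows, and the summary tree bounds how much text any single call must read.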

Using OpenAI Models Inside Chat & Ask AI

Within the Chat & Ask AI interface, model selection and task setup follow a consistent flow. A model choice can be specified before submission, or the system can suggest a suitable variant based on task type. Inputs may be typed, pasted, or uploaded. Outputs return as readable text, code blocks, or media assets depending on the request and model capability.

Interaction Flow and Supported Input Types

Text prompts: natural-language questions, instructions, or structured prompts.

Documents: PDFs, Word files, and other text documents for summarization or extraction.

Code: snippets for review, testing, or generation.

Images: uploaded visuals for captioning, analysis, or context-aware responses.
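Sorting an upload into one of the input types listed above can be approximated by file extension. In this sketch the extension sets and the `input_kind` helper are hypothetical, and the platform's accepted formats are not documented here; only Python's standard `mimetypes.guess_type` is a real library call, used as a fallback for image detection.

```python
import mimetypes
from pathlib import Path

# Hypothetical extension lists, chosen for illustration only.
CODE_EXTS = {".py", ".js", ".ts", ".java", ".c", ".cpp", ".go", ".rs"}
DOC_EXTS = {".pdf", ".doc", ".docx", ".txt", ".md", ".rtf"}


def input_kind(filename: str) -> str:
    """Classify an uploaded file as code, document, image, or unsupported."""
    suffix = Path(filename).suffix.lower()
    # Check code first: some source extensions (e.g. .ts) have unrelated MIME types.
    if suffix in CODE_EXTS:
        return "code"
    if suffix in DOC_EXTS:
        return "document"
    mime, _encoding = mimetypes.guess_type(filename)
    if mime is not None and mime.startswith("image/"):
        return "image"
    return "unsupported"


for name in ("report.PDF", "main.py", "photo.png", "archive.xyz"):
    print(name, input_kind(name))
```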

Chained interactions are supported so model outputs can feed subsequent prompts for refinement.
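A chained refinement loop feeds each output back in with the next instruction. The `model` argument is a hypothetical stand-in for any prompt-in, text-out call, and the follow-up prompt template is an assumption.

```python
from typing import Callable, List


def refine(prompt: str, model: Callable[[str], str], instructions: List[str]) -> List[str]:
    """Run an initial prompt, then feed each answer back with the next instruction."""
    answer = model(prompt)
    history = [answer]
    for instruction in instructions:
        # Each follow-up prompt carries the previous output forward for refinement.
        answer = model(f"{instruction}\n\nPrevious answer:\n{answer}")
        history.append(answer)
    return history


def echo_first_line(prompt: str) -> str:
    """Stand-in model that returns the first line of its prompt."""
    return prompt.splitlines()[0]


print(refine("Draft a tagline for a coffee shop", echo_first_line, ["Make it shorter", "Add urgency"]))
```

Keeping the full history makes it easy to compare drafts or roll back to an earlier version.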

Availability, Stability, and Access Notes

Model availability follows platform status and access policies. Throughput and response stability can vary with system load and selected variant. Status indicators show maintenance windows or transient interruptions. Access controls and authentication determine who may select advanced variants; error handling and retry guidance support interrupted operations.
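For interrupted operations, a common client-side pattern is retrying with exponential backoff and random jitter. The sketch assumes a hypothetical `TransientError` raised for timeouts or unavailable-model responses; `with_retries`, the attempt count, and the delays are placeholders, not platform guidance.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Stand-in for a timeout or temporarily unavailable model (hypothetical)."""


def with_retries(
    call: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` on TransientError, doubling the delay cap after each failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the error to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)
            # "Full jitter": a random wait up to the cap spreads out simultaneous retries.
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter makes the backoff testable without real waiting.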

Frequently Asked Questions

What are the OpenAI models available inside Chat & Ask AI?

Available deployments include variants built on GPT-5.1, GPT-5, OpenAI o3, GPT-4o, GPT-4o mini, and GPT-4.1, each suited to a different task profile.

How do these models handle text, images, or other multimodal inputs?

Multimodal variants process text and images together, combining both into a shared internal representation, and return summaries, answers, or media outputs depending on the task.

Do OpenAI models on Chat & Ask AI have usage limits or context constraints?

Yes. Each model has configured context window limits and rate controls that set maximum input length and request frequency.
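Rate controls are often implemented with a token bucket, and context limits with a simple length check. The sketch below uses both as illustrative techniques; the platform's actual mechanism, limits, and token counting are not documented here, so `TokenBucket`, `fits_context`, and all numbers are placeholders. It also assumes, as is common, that the context window is shared by the input and the reserved output.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `rate` requests per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


def fits_context(prompt_tokens: int, max_output_tokens: int, context_window: int) -> bool:
    """Input plus the space reserved for output must fit in the context window."""
    return prompt_tokens + max_output_tokens <= context_window
```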

How can users access or switch between different OpenAI models?

Model selection is available in the interface before submission or via settings; some workflows may auto-select a variant based on task type.

Are OpenAI models suitable for tasks like coding, analysis, and content generation?

Yes. Specific variants are configured for code understanding, analytical reasoning, and content creation workflows.

Is there a free way to use OpenAI models inside Chat & Ask AI?

A basic access tier offers limited use of some model variants, subject to platform terms and usage limits.

How does pricing work for OpenAI models when used through the platform?

Pricing varies by account tier and selected variant; detailed billing information appears in account settings.

What should users do if an OpenAI model appears unavailable or unresponsive?

Check the platform status indicator, retry after a short interval, and contact support if the issue continues.

How do the models handle safety, accuracy, and reliable output generation?

System prompts, content filters, and post-processing checks are applied to reduce unsafe outputs and flag uncertain responses.

Can OpenAI models be used for image creation or other creative tasks?

Yes. Certain variants can generate or assist in creating image outputs and other creative media when configured for generative tasks.

What types of projects benefit most from the OpenAI models available on Chat & Ask AI?

Projects involving document analysis, code assistance, multilingual content, and multimodal content workflows commonly use these model variants.