AI Detector: How AI Detection Works

AI detectors are tools made to assess whether content likely came from a machine learning model or from a human. They evaluate written material—short messages, essays, articles—and visual content such as generated images and photos. The purpose of these tools is to provide likelihood assessments that help reviewers, editors, and platforms understand probable origin without asserting absolute certainty.

Detect and Improve AI-Written Text

With the rise of generative AI, we need tools that promote transparency in writing and editing. The AI detector and AI text detector from Ask AI give individuals and teams a straightforward way to estimate whether text was written by a person or generated by AI.

What Is an AI Detector? Core Function and Evaluation Principles

An AI detector is typically a classifier trained to recognize differences between model-generated and human-produced content. For text, classifiers analyze token probabilities, sentence transitions, repetitive structures, and stylistic regularities that often appear in outputs from large language models. For images, detectors examine pixel patterns, edge consistency, noise distributions, and model-specific artifacts that can signal use of diffusion or other generative systems. These systems produce probability scores or classification outputs indicating relative likelihood. Results should be read as indicators rather than definitive judgments. Human review remains important when outcomes affect academic integrity, legal matters, or publishing decisions.
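To make the idea of "token probabilities" concrete, here is a minimal sketch, not the method any particular detector uses. Real detectors typically score text with a large language model; this example substitutes a toy unigram model built from a reference sample, and the function name `unigram_perplexity` is a hypothetical chosen for illustration. Lower perplexity means the text is more predictable under the model, which is one of the signals a classifier may weigh.

```python
import math
from collections import Counter


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`.

    Assumption: a toy unigram model stands in for the large language
    model a real detector would use to obtain token probabilities.
    """
    ref_tokens = reference.lower().split()
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")

    counts = Counter(ref_tokens)
    vocab = set(ref_tokens) | set(tokens)
    total = len(ref_tokens) + len(vocab)  # add-one smoothing denominator

    # Sum of log-probabilities of each token in the text.
    log_prob = sum(math.log((counts[t] + 1) / total) for t in tokens)
    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat the cat ran"
predictable = unigram_perplexity("the cat sat", reference)
surprising = unigram_perplexity("quantum zebra yodels", reference)
print(predictable < surprising)
```

A single perplexity value is only one indicator; it would normally be combined with other signals and reviewed by a person before any decision is made.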

Paste your text into the detector box

Run the AI check with one click

Review the results and detailed reports

Detection of Text, Essays, and Written Content

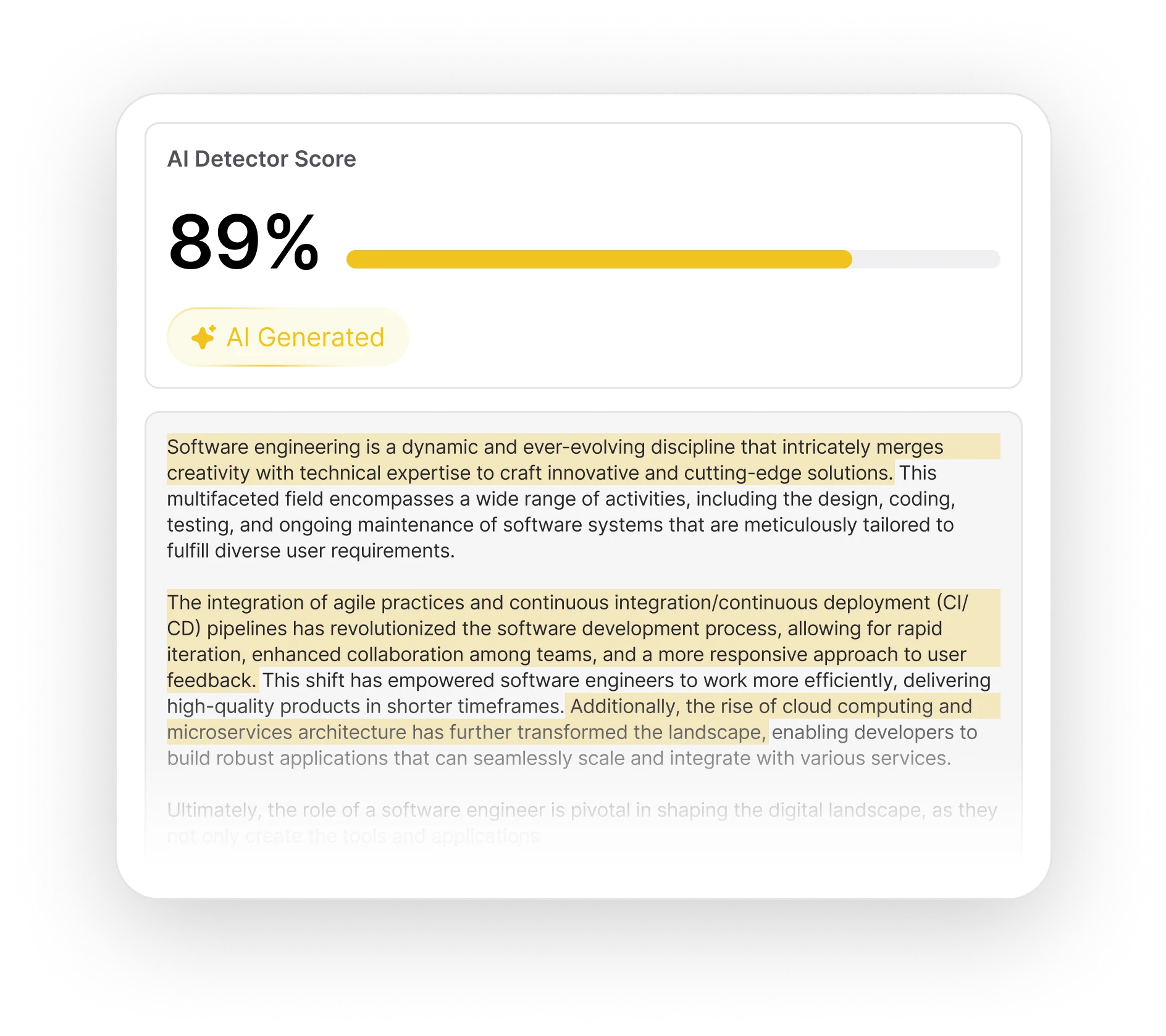

Text detection uses statistical and stylometric signals. Classifiers compare token-level probability distributions with known model behavior, flag repetitive constructions, detect limited lexical variety, and measure coherence patterns. Essays receive added checks for structural markers (uniform paragraph lengths, predictable topic transitions, or repeated phrasing) that may differ from typical human drafts. Detection models may combine language-model fingerprints and stylometric comparisons to produce a likelihood score that a passage was generated by an AI.
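The stylometric signals described above can be sketched with a few simple measurements. This is an illustrative example under stated assumptions, not the feature set of any specific detector: the function name `stylometric_signals` and the three chosen features (type-token ratio for lexical variety, sentence-length spread for uniformity, and the share of repeated three-word sequences for repetitive constructions) are hypothetical stand-ins.

```python
import re
from collections import Counter
from statistics import pstdev


def stylometric_signals(text: str) -> dict:
    """Compute three simple stylometric features (illustrative only)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        raise ValueError("text must contain at least one word")

    # Words per sentence; low spread suggests uniform sentence lengths.
    lengths = [len(s.split()) for s in sentences]

    # Count every three-word sequence, then total the ones seen more than once.
    trigrams = Counter(zip(words, words[1:], words[2:]))
    total_trigrams = sum(trigrams.values())
    repeated = sum(c for c in trigrams.values() if c > 1)

    return {
        # Unique words divided by total words; lower means less lexical variety.
        "type_token_ratio": len(set(words)) / len(words),
        "sentence_length_stdev": pstdev(lengths) if lengths else 0.0,
        "repeated_trigram_share": repeated / total_trigrams if total_trigrams else 0.0,
    }


sample = "The tool is fast. The tool is good. The tool is easy."
print(stylometric_signals(sample))
```

None of these features is conclusive on its own: careful human writers can also produce uniform, repetitive text, which is why such measurements feed a likelihood estimate rather than a verdict.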

Instant text analysis with a simple copy-and-paste

Detailed reports for regulatory or editorial checks

Regular updates to keep up with the latest AI language models


Use Cases: Who Should Use the AI Detector?

The AI essay detector and AI paper detector can be used to check essays, reports, and research papers from students at all levels. By flagging text that is likely AI-generated, educators can keep assessments fair, discourage plagiarism, and support original student work, in line with educational policies.

Educators and academic institutions

Content creators and editors

Recruiters and HR teams

Businesses and marketing teams

Frequently Asked Questions

What does an AI detector check for?

Checks include token probability distributions, repetitive phrasing, and stylometric markers for text; and pixel-level artifacts, edge inconsistencies, or noise patterns for images.

Are AI detectors accurate?

Detection tools give probabilistic assessments. Accuracy depends on the model, content type, editing level, and dataset; results are indicators, not definitive proof.

Can AI detectors identify edited or human-mixed content?

Edited or mixed content often lowers detection confidence. Some signals may remain, but outcomes become less certain when human edits are substantial.

How do AI text detectors evaluate essays?

Essays are analyzed for token patterns, paragraph structure, vocabulary variety, and stylistic regularities; classifiers combine these signals into a likelihood score.
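As a rough illustration of how several signals might be combined into one likelihood score, the sketch below uses a weighted sum passed through a logistic function. The signal names, weights, and bias are hypothetical values chosen for the example; a real classifier would learn its parameters from labeled training data and may use a different model entirely.

```python
import math

# Hypothetical weights for illustration only; a trained classifier
# would learn these from labeled examples.
WEIGHTS = {
    "low_perplexity": 1.5,
    "repetition": 1.0,
    "low_lexical_variety": 0.8,
    "uniform_paragraphs": 0.7,
}
BIAS = -2.0


def likelihood_score(signals: dict) -> float:
    """Map signal strengths (each in 0..1) to a score between 0 and 1."""
    # Missing signals are treated as 0.0 (not observed).
    z = BIAS + sum(WEIGHTS[name] * signals.get(name, 0.0) for name in WEIGHTS)
    # The logistic function squashes the weighted sum into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


weak = likelihood_score({"repetition": 0.2})
strong = likelihood_score({name: 1.0 for name in WEIGHTS})
print(weak < strong)
```

The output is a relative likelihood, so it should be read alongside the individual signals rather than as proof of origin.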

Do AI detectors work on images and photos?

Yes. Image detectors look for texture repetition, inconsistent edges, lighting anomalies, and model-specific noise patterns to estimate generation likelihood.

Why do different detectors give different results?

Differences stem from training data, feature sets, classification thresholds, and model architectures, which produce varying likelihood scores.
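The effect of classification thresholds alone can be shown with a small sketch. The function name `label`, the score of 0.62, and both threshold values are hypothetical; the point is only that the same score can receive different labels from detectors tuned differently.

```python
def label(score: float, threshold: float) -> str:
    """Turn a likelihood score into a label using a detector-specific threshold."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    return "likely AI-generated" if score >= threshold else "likely human-written"


score = 0.62  # hypothetical score for one passage

# A cautious detector that flags only high scores.
print(label(score, threshold=0.8))
# A more sensitive detector that flags anything above the midpoint.
print(label(score, threshold=0.5))
```

In practice detectors also differ in how the score itself is computed, so disagreement can arise even before any threshold is applied.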

Can an AI detector say with certainty that content is human-written?

No. Detection systems provide probabilistic outputs and cannot offer absolute certainty that content is human-written.

How do AI detectors handle rewritten or paraphrased text?

Paraphrasing and rewriting can obscure generation signals; detectors may show reduced confidence or inconclusive results for heavily modified content.

Are free AI detectors reliable?

Free detectors can be informative but vary in training quality, feature coverage, and update frequency. Reliability depends on underlying models and evaluation methods.

How does AI detection work on Chat & Ask AI?

Chat & Ask AI analyzes submitted text and images with internal classifiers and artifact checks, returning score-based assessments and signal breakdowns to support human review.